20 February 2024

The very existence of generative AI has raised a lot of concerns about how the tool can be used unethically and what the potential risks are. Generative AI cannot autonomously decide whether something is unethical (whatever that means without a specific context), as it only responds to the cues given by its user. Even if we teach AI to ignore commands containing words that suggest an unethical outcome, a user with good enough language skills can put the same request in other words and get the same result.
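The rephrasing problem described above can be shown with a minimal sketch. The blocklist, the function name `naive_filter`, and both prompts are hypothetical and chosen only for illustration; real GenAI tools rely on far more than keyword matching.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_WORDS = {"steal", "hack", "forge"}


def naive_filter(prompt: str) -> bool:
    """Return True if the prompt is allowed, False if it contains a blocked word."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return words.isdisjoint(BLOCKED_WORDS)


# The same intent, phrased with and without a blocked word.
direct = "Write an email to steal a colleague's password"
rephrased = "Write an email that persuades a colleague to share their password with me"

print(naive_filter(direct))     # False: the word "steal" is on the blocklist
print(naive_filter(rephrased))  # True: same goal, no blocked word
```

Both prompts aim at the same outcome, but only the first one is stopped, which is why a word list alone cannot decide what is unethical.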

WHO IS RESPONSIBLE FOR THE OUTCOME?

Since GenAI is not conscious and cannot be sued, someone else clearly has to be responsible for the consequences of unethical GenAI use. The question is: who? The person who wrote the cue, the person who shared the content, or the person responsible for creating or implementing a specific GenAI tool in a specific organisation?

The question is actually not new: it was raised earlier in the context of autonomous cars causing accidents. On March 18, 2018, Elaine Herzberg was hit and killed by a self-driving Uber test vehicle in Arizona. The automated car was capable of detecting cars and certain obstacles that would normally be on the road, but it failed to recognise a person in the road, which led to the accident. The incident raised the question of whether the driver, the pedestrian, the car company, or the government should be held responsible for situations (and deaths) like this one.

GenAI is quite similar in this respect: humans can be hurt in the process, and it is unclear who should be responsible for the outcome. Legally, this is still to be decided.

CAN GENAI UNDERSTAND ETHICS?

In theory – yes, if the tool is taught different models and theoretical approaches to ethics. It’s a completely different question, however, whether it will be able to (or should) refuse to do something morally grey. Also – creating something through GenAI and distributing it are two different acts, even when we talk about content that’s morally grey.

Two approaches have been proposed for teaching smart machines (in general) how to deal with ethical dilemmas. One assumes that GenAI should learn from the reactions of its users, while the other assumes it should be taught the particular moral rules of philosophical systems. Neither strategy seems to be error-proof, which is the real problem.

Moreover, when the EU decided on its guidelines on ethics in AI, the discussion concerned tools created to help optimize decision-making processes, such as recruitment. It was stated that such operations should be supported by AI, but the final decision should be made by a human who interprets the outcome and decides how to deal with the data. The point was that no machine can be in full control.

GenAI is different in this respect: it takes the cues and then generates whatever fits them. If the user asks for something indecent, GenAI will do exactly what it was asked to do.

GENAI DILEMMAS IN A BUSINESS ENVIRONMENT

In a corporate environment, we can expect more phishing, custom lures used in chats and videos, and deepfakes.

The problem with GenAI tools is that they use the data they are given (without really differentiating what is sensitive) and create new data, which can be vulnerable to bias, poor quality, unauthorized access, and loss. GenAI will just create, without analyzing potential risks.

Employees entering sensitive data into public generative AI models are therefore already creating a significant problem. Depending on the provider's terms, a public GenAI service may store input information indefinitely and use it to train other models, so once you put something in there, you should assume it is there forever.
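One technical safeguard that can sit alongside employee awareness is to mask obviously sensitive values before a prompt leaves the organisation. The sketch below is a minimal illustration under stated assumptions: the `PATTERNS` table, the `redact` function, and the sample prompt are hypothetical, and two regular expressions will miss many kinds of sensitive data.

```python
import re

# Illustrative patterns only (assumption): e-mail addresses and
# 13 to 16 digit card-like numbers. Real data needs broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def redact(text: str) -> str:
    """Replace every match of a known pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = (
    "Summarise the complaint from jan.kowalski@example.com "
    "about card 4111 1111 1111 1111."
)
print(redact(prompt))
# Summarise the complaint from [EMAIL] about card [CARD].
```

A filter like this only reduces exposure. It cannot recognise a confidential contract or an unreleased financial figure, so it does not replace the judgment of the person writing the prompt.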

Training people seems to be the most effective way to make sure GenAI is used ethically.

THE PROBLEM WITH AI PRINCIPLES

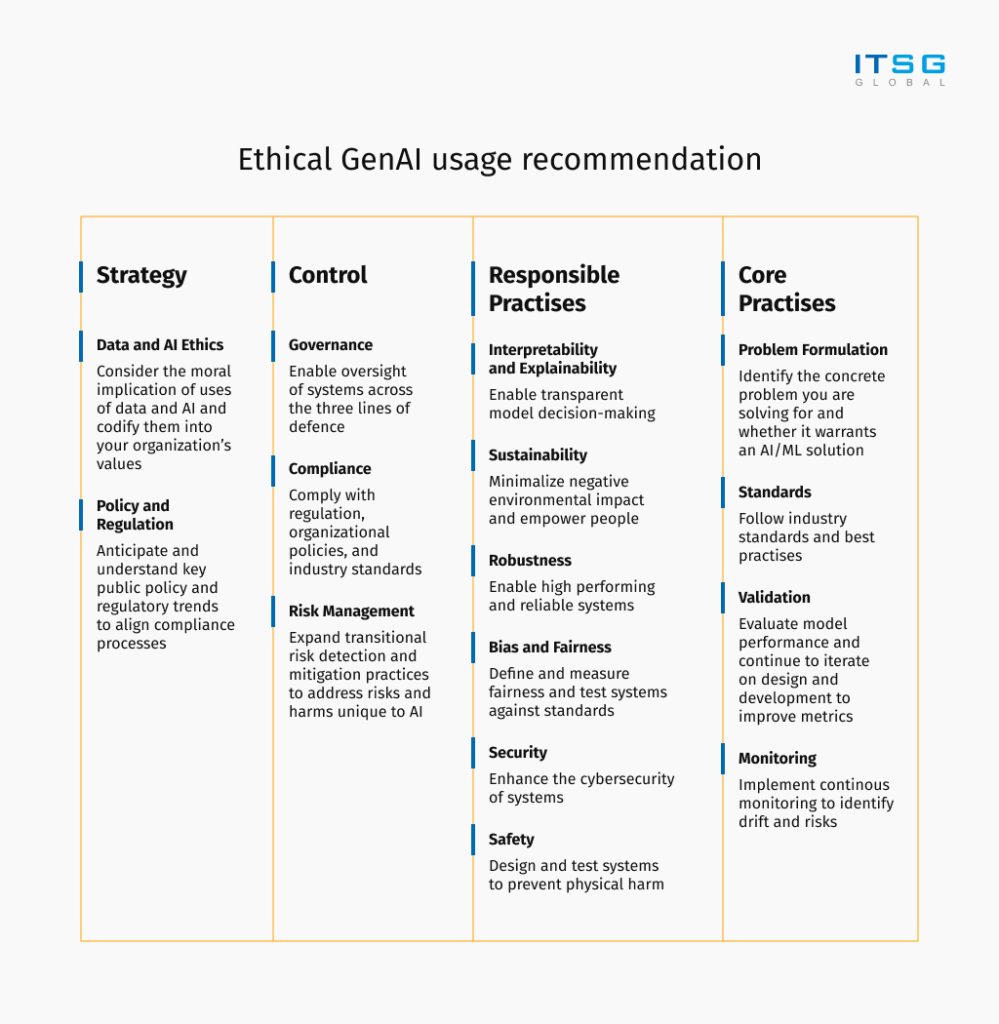

AI principles were created before GenAI became the next big thing. The original 5 AI principles were: fairness and bias, trust and transparency, accountability, social benefit, and privacy and security.

Those principles were already quite abstract before GenAI, and now they raise even more questions. The point is that AI will accompany us into the future, so ethical questions have to be raised and actions have to be taken as new issues arise.

Even though humans are the ones teaching GenAI how to work, GenAI will teach us a lot about dealing with ethics.

Author: Cezary Dmowski, CTO at ITSG Global
