Gemma 2: Google’s Game-Changer in AI Efficiency

In the rapidly evolving world of artificial intelligence, Google has taken a significant step forward with the latest addition to its Gemma 2 family, a compact model with roughly 2 billion parameters. It is reshaping expectations of what’s possible in machine learning, especially when it comes to efficiency and accessibility.

A New Paradigm in AI Development

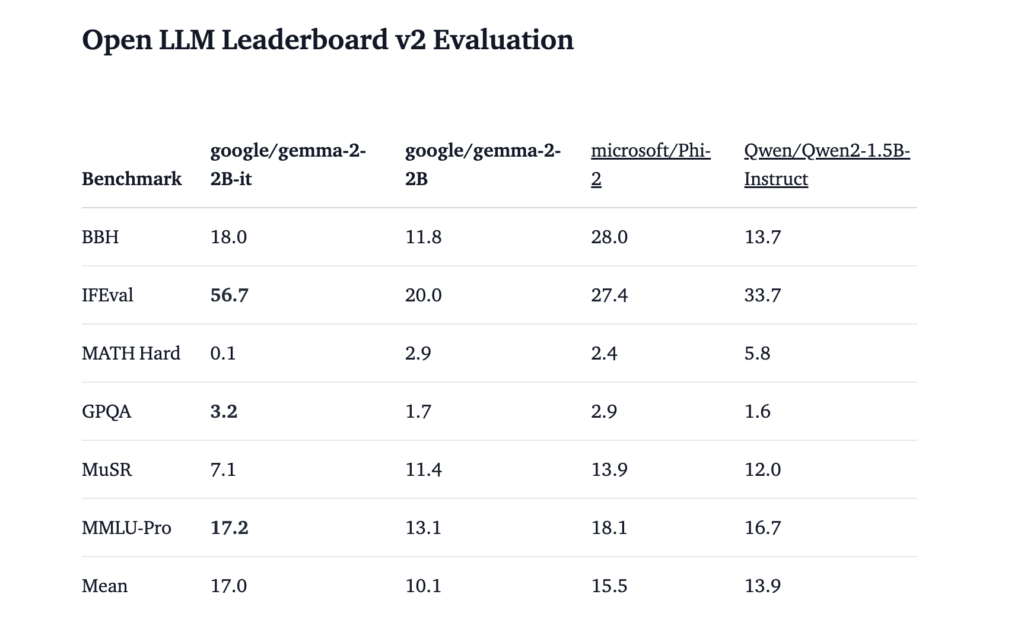

For years, the AI community has operated under the assumption that bigger models inherently mean better performance. Gemma 2 challenges this notion, showing that sometimes less really is more. With just 2 billion parameters, a fraction of what larger models carry, it scored ahead of bulkier counterparts in Chatbot Arena’s human-preference rankings, including the GPT-3.5 models behind earlier versions of ChatGPT.

This shift towards smaller, more efficient models isn’t just a technical achievement; it’s a game-changer for businesses looking to implement AI solutions without breaking the bank on hardware costs.

Safety First: The ShieldGemma Advantage

In today’s digital landscape, responsible AI deployment is non-negotiable. Google addresses this with ShieldGemma, a set of safety classifier models built on Gemma 2 and released alongside it. Rather than being built into the model itself, ShieldGemma works as a separate filter that screens a model’s inputs and outputs for harmful content, including hate speech, harassment, and explicit material. For businesses, this means greater confidence that AI interactions with customers and employees remain safe and productive.
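To make the filtering idea concrete, here is a minimal sketch of a safety gate placed around a text generator. It is not ShieldGemma's actual interface: `score_fn`, `toy_scorer`, `guarded_reply`, the category names, and the 0.5 threshold are all hypothetical stand-ins for whatever classifier and policy a deployment really uses.

```python
from typing import Callable, Dict

# Hypothetical category names, mirroring the harm types named in the text.
CATEGORIES = ("hate_speech", "harassment", "explicit")
REFUSAL = "Sorry, I can't help with that."


def is_allowed(
    text: str,
    score_fn: Callable[[str], Dict[str, float]],
    threshold: float = 0.5,
) -> bool:
    """Return True when every category score is below the threshold.

    score_fn stands in for a safety classifier and is assumed to return
    a score in [0, 1] per category; missing categories count as 0.
    """
    scores = score_fn(text)
    return all(scores.get(cat, 0.0) < threshold for cat in CATEGORIES)


def guarded_reply(
    prompt: str,
    generate: Callable[[str], str],
    score_fn: Callable[[str], Dict[str, float]],
) -> str:
    """Screen the prompt first, then screen the generated reply."""
    if not is_allowed(prompt, score_fn):
        return REFUSAL
    reply = generate(prompt)
    return reply if is_allowed(reply, score_fn) else REFUSAL


def toy_scorer(text: str) -> Dict[str, float]:
    """Placeholder scorer for demonstration only; NOT a real classifier."""
    flagged = "insult" in text.lower()
    return {
        "hate_speech": 0.0,
        "harassment": 0.9 if flagged else 0.1,
        "explicit": 0.0,
    }
```

Checking both the prompt and the reply is a design choice: it catches harmful requests early and also catches cases where a harmless prompt still produces an unsafe answer.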

Transparency Through Gemma Scope

One of the standout releases accompanying Gemma 2 is Gemma Scope, a set of open sparse autoencoders that brings greater transparency to how the model works internally. For too long, AI models have been black boxes, leaving users in the dark about how decisions are made. Gemma Scope helps change this by letting researchers peer into the model’s inner workings and see which internal features activate at different layers in response to specific input tokens.

This level of transparency is crucial for building trust in AI systems, especially for businesses that need to explain AI-driven decisions to stakeholders or regulatory bodies.
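The idea of watching which internal features "light up" for a token can be illustrated with a toy sparse autoencoder encoder. This is only a sketch of the general technique (a JumpReLU-style encoder, where a feature fires only above a per-feature threshold); the weights, thresholds, and activation vectors below are made up for illustration and are not taken from Gemma Scope.

```python
from typing import List, Sequence


def sae_encode(
    activation: Sequence[float],
    w_enc: Sequence[Sequence[float]],
    b_enc: Sequence[float],
    thresholds: Sequence[float],
) -> List[float]:
    """Map one activation vector to sparse feature activations.

    JumpReLU-style rule: a feature keeps its pre-activation value only
    if that value exceeds the feature's threshold; otherwise it is 0.
    """
    features = []
    for weights, bias, theta in zip(w_enc, b_enc, thresholds):
        pre = sum(w * a for w, a in zip(weights, activation)) + bias
        features.append(pre if pre > theta else 0.0)
    return features


def top_features(features: Sequence[float], k: int = 2) -> List[int]:
    """Indices of the k most strongly active (non-zero) features."""
    active = [(value, idx) for idx, value in enumerate(features) if value > 0.0]
    return [idx for _, idx in sorted(active, reverse=True)[:k]]


# Made-up encoder with 4 features over a 3-dimensional activation.
W_ENC = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, 0.5, 0.0],
]
B_ENC = [0.0, 0.0, 0.0, 0.0]
THETA = [0.3, 0.3, 0.3, 0.3]

# Made-up per-token activations captured at one layer.
TOKEN_ACTIVATIONS = {
    "Paris": [0.9, 0.1, 0.0],
    "runs": [0.0, 0.2, 0.8],
}

if __name__ == "__main__":
    for token, act in TOKEN_ACTIVATIONS.items():
        feats = sae_encode(act, W_ENC, B_ENC, THETA)
        print(token, "-> active features:", top_features(feats))
```

In a real interpretability workflow, each feature index would be paired with a human-readable description, so that the list of active features reads as an explanation of what the model is representing at that token.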

Impressive Performance in a Compact Package

Despite its relatively small size, Gemma 2 packs a punch:

- Trained on an impressive 2 trillion tokens

- Utilizes knowledge distillation from larger models

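- Boasts an 8,192-token (8K) context window

- Requires only about 1 gigabyte of memory for its weights when they are quantized to roughly 4 bits per parameter (higher precision needs more)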

These specs allow Gemma 2 to process substantial amounts of input data efficiently, making it an attractive option for businesses looking to implement AI solutions without investing in hefty hardware upgrades.
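How much memory a model needs depends heavily on the numeric precision of its weights. The back-of-the-envelope sketch below assumes a nominal 2 billion parameters and counts the weights only (no activations, cache, or runtime overhead); the function name and precision table are illustrative.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gb(2e9, dtype):.1f} GB")
```

Under these assumptions, a figure of about 1 gigabyte corresponds to 4-bit weights, while 16-bit weights would take about 4 gigabytes.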

The Human Touch: Refining AI Through User Preferences

The development of models like Gemma 2 isn’t happening in a vacuum. The AI community is increasingly turning to human feedback to evaluate and refine these systems. Platforms like the Chatbot Arena, which ranks models according to human preferences between AI responses, play a crucial role in showing how models compare in practice. This focus on human preferences isn’t just a trend; it’s essential for creating AI that resonates with users and provides real value in business contexts.
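One common way to turn pairwise human preferences into a ranking is an Elo-style rating, sketched below. This is a standard Elo update used for illustration; the Arena's own statistical methodology may differ, and the starting ratings and `k` factor here are arbitrary assumptions.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(
    rating_a: float, rating_b: float, a_won: bool, k: float = 32.0
) -> Tuple[float, float]:
    """Return both ratings after one human vote between A and B."""
    delta = k * ((1.0 if a_won else 0.0) - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    small_model, large_model = 1000.0, 1000.0
    # Three hypothetical votes: the small model is preferred twice.
    for small_won in (True, False, True):
        small_model, large_model = update_elo(small_model, large_model, small_won)
    print(round(small_model, 1), round(large_model, 1))
```

Because each vote moves ratings by an amount that depends on how surprising the result was, a small model that keeps winning against higher-rated models climbs quickly.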

Limitations and the Road Ahead

While Gemma 2 excels in conversational tasks, it faces challenges with certain programming benchmarks. This underscores an important point: even advanced models have their limitations. Regular testing and community feedback are vital for understanding where those limits lie and for improving performance.

As more companies, including tech giants like Apple, enter the AI market, competition will likely drive further innovation. Because smaller, more efficient models are easier to run and inspect, working with them directly can improve both transparency and user engagement with the technology.

Conclusion

Google’s Gemma 2 represents a significant stride in AI technology. Its combination of efficiency, transparency, and safety tooling makes it a noteworthy player in the evolving AI landscape. As we continue to explore these advancements, engaging with tools like Gemma Scope and testing various queries will be key to unlocking the full potential of AI in both technical and business contexts.

The future of AI isn’t just about raw power – it’s about smart, efficient, and responsible implementation. Gemma 2 is leading the charge in this new direction, promising a future where AI is not just powerful, but also accessible, understandable, and trustworthy.