The Quiet Revolution in AI: Prompt Caching

Anthropic has introduced a feature for its Claude models that might not sound exciting at first, but could revolutionize how we build AI applications. It’s called prompt caching, and it promises to cut costs by up to 90% and reduce latency by up to 85% for long prompts. That’s a significant development in the world of AI.

What is Prompt Caching?

Prompt caching lets Claude reuse work it has already done on the parts of a prompt that stay the same from one request to the next. You still include that information with each request, but instead of processing it from scratch every time, the API recognizes a previously cached prefix and picks up from there. It’s akin to leaving a note for someone instead of explaining the same thing every time you see them.

The Problem It Solves

This might seem like a minor improvement, but it addresses a real issue. AI models like Claude are incredibly powerful, but they’re also expensive to run. Every token sent to them is billed. For applications that frequently communicate with Claude, these costs can quickly accumulate.

How It Works

Here’s a practical example:

Imagine you’re building a chatbot for customer service. You might have a lengthy set of instructions for Claude, including how to act, what tone to use, and what information to provide. Without caching, Claude has to process all of these instructions every time someone asks a question. With caching, you mark the instructions as cacheable: you still include them in each request, but after the first one Claude reads them from the cache instead of processing them again, as long as the cache hasn’t expired.
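In request terms, the customer-service example might look like the sketch below. It assumes Anthropic’s Messages API request format, where a content block carrying `"cache_control": {"type": "ephemeral"}` marks the end of the cacheable prefix. `SUPPORT_INSTRUCTIONS`, `build_request`, and the model name are illustrative placeholders, and the code only builds the request body rather than sending it.

```python
# Hypothetical stand-in for a long, stable set of support instructions.
# Caching only applies once the cached prefix reaches the model's minimum
# length (1,024 tokens for the larger Claude models).
SUPPORT_INSTRUCTIONS = (
    "You are a friendly customer-service agent for ExampleCo. "
    "Answer politely, cite the relevant policy, and escalate refunds over $100. "
) * 50  # repeated only to make the prompt long


def build_request(question: str) -> dict:
    """Build a Messages API request body whose system prompt is marked cacheable."""
    return {
        "model": "claude-3-5-sonnet-20240620",  # example model name; substitute your own
        "max_tokens": 512,
        "system": [
            {
                "type": "text",
                "text": SUPPORT_INSTRUCTIONS,
                # Everything up to and including this block becomes the
                # cacheable prefix that later requests can reuse.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Only the user's question changes from request to request.
        "messages": [{"role": "user", "content": question}],
    }


first = build_request("Where is my order?")
second = build_request("How do I return a damaged item?")
# The system blocks are identical, which is what allows the second request
# to read the instructions from cache instead of reprocessing them.
```

The design point is that the stable material comes first and the varying question comes last: the cache matches on an exact prefix, so any change to the instructions produces a miss.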

The Benefits

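- Cost Savings: Reading cached tokens costs only 10% of the normal input-token price. Writing content to the cache costs 25% more than normal, so the savings come from reusing it.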

- Increased Speed: Because the cached portion doesn’t have to be processed again, responses start sooner, especially for long prompts.

- Practical Applications: Lower cost and latency make applications with long, repeated context more practical to run at scale.
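To see how the 10% read price and the 25% write premium combine, here is a small cost model. It is a simplified sketch: it assumes every request after the first arrives while the cache is still warm, ignores output tokens, and uses an illustrative base price of $3 per million input tokens. The function name `input_cost` is hypothetical.

```python
# Multipliers relative to the base input-token price, as announced for
# Claude prompt caching: cache writes cost 25% more, cache reads cost 10%.
CACHE_WRITE_MULTIPLIER = 1.25
CACHE_READ_MULTIPLIER = 0.10


def input_cost(prefix_tokens: int, suffix_tokens: int, requests: int,
               price_per_mtok: float, cached: bool) -> float:
    """Input cost in dollars for `requests` calls that share a prefix.

    Simplifying assumption: the cache never expires between calls, so the
    first call writes the prefix and every later call reads it.
    """
    per_token = price_per_mtok / 1_000_000
    if not cached:
        return (prefix_tokens + suffix_tokens) * requests * per_token
    write = prefix_tokens * CACHE_WRITE_MULTIPLIER
    reads = prefix_tokens * CACHE_READ_MULTIPLIER * (requests - 1)
    fresh = suffix_tokens * requests  # the varying part is never cached
    return (write + reads + fresh) * per_token


# 10,000-token instructions, 200-token questions, 100 requests.
without_cache = input_cost(10_000, 200, 100, 3.0, cached=False)
with_cache = input_cost(10_000, 200, 100, 3.0, cached=True)
```

With these numbers the input cost drops from $3.06 to about $0.39. For a single request, though, caching is more expensive (the write premium is paid with nothing to amortize it over); one reuse within the cache lifetime is enough to come out ahead.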

Comparison with Google’s Approach

Google recently introduced a similar feature for their Gemini models, but there are key differences:

- Google requires caching at least 32,768 tokens at once, while Anthropic allows as few as 1,024 tokens (2,048 for Claude 3 Haiku).

- Google’s cache lasts for an hour by default (the lifetime is configurable), while Anthropic’s lasts for 5 minutes, with the timer reset each time the cached content is used.

These differences make Anthropic’s approach more flexible, especially for applications dealing with shorter text chunks or frequent updates.
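The two expiry policies behave quite differently under bursty traffic. The sketch below is a simplified model, not either vendor’s implementation: it treats Anthropic’s cache as a 5-minute window that restarts on every hit, treats Google’s as a fixed window counted from when the cache was written, and assumes the application rewrites (or recreates) the cache on every miss. `cache_hits` is a hypothetical helper.

```python
def cache_hits(request_times_min: list[float], ttl_min: float,
               refresh_on_hit: bool) -> list[bool]:
    """Return, for each request time (in minutes), whether it hits the cache.

    refresh_on_hit=True models a sliding window (the expiry clock restarts on
    each hit); False models a fixed lifetime counted from the cache write.
    """
    hits = []
    expires_at = None
    for t in request_times_min:
        if expires_at is not None and t < expires_at:
            hits.append(True)
            if refresh_on_hit:
                expires_at = t + ttl_min  # sliding window: restart the clock
        else:
            hits.append(False)  # miss: assume the cache is written again
            expires_at = t + ttl_min
    return hits


times = [0, 3, 7, 20, 40, 70]
sliding_5 = cache_hits(times, 5, refresh_on_hit=True)
fixed_60 = cache_hits(times, 60, refresh_on_hit=False)
```

In this model, a steady stream of requests keeps the short sliding cache warm indefinitely, while a gap longer than five minutes (minute 7 to minute 20) loses it; the one-hour fixed window rides out those gaps but expires at minute 60 regardless of activity.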

Prompt Caching and RAG

It’s important to note that prompt caching isn’t a replacement for techniques like Retrieval-Augmented Generation (RAG). In fact, they can work together:

- RAG can pull relevant information from a large database.

- Caching can efficiently reuse that information across multiple interactions.

This hybrid approach could be particularly powerful for applications handling complex, knowledge-intensive tasks.
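A minimal sketch of the combination, assuming the same Messages API request shape as before: the retrieval step itself is omitted, `retrieved_docs` stands in for whatever a RAG retriever returns, and `build_rag_request` and the `<document>` tagging are illustrative choices rather than a required format. The retrieved context sits in a cacheable block, so follow-up questions about the same documents reuse it.

```python
def build_rag_request(retrieved_docs: list[str], question: str) -> dict:
    """Place retrieved documents in a cacheable block ahead of the question."""
    context = "\n\n".join(
        f'<document index="{i}">\n{doc}\n</document>'
        for i, doc in enumerate(retrieved_docs, start=1)
    )
    return {
        "model": "claude-3-5-sonnet-20240620",  # example model name
        "max_tokens": 1024,
        "system": [
            {"type": "text", "text": "Answer using only the documents provided."},
            {
                "type": "text",
                "text": context,
                # Breakpoint after the retrieved context: later questions
                # about the same documents can reuse this prefix.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        "messages": [{"role": "user", "content": question}],
    }


docs = ["Refunds are issued within 14 days.", "Shipping is free over $50."]
first_q = build_rag_request(docs, "How long do refunds take?")
follow_up = build_rag_request(docs, "Is shipping free?")
```

One practical consequence: because caching matches an exact prefix, the retriever should return documents in a stable order. The same documents in a different order produce a different prefix and a cache miss.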

Implications for AI Development

The introduction of prompt caching highlights a broader trend in AI development. We’re shifting from simply making models bigger and more powerful to figuring out how to use them efficiently in real-world applications.

Opportunities and Challenges for Developers

For developers, this presents new opportunities and challenges:

- Prompt caching requires careful thought about what to cache, how to structure prompts, and how to manage the cache over time.

- Anthropic’s implementation allows up to four cache breakpoints within a single prompt, enabling more granular control over which parts are reused.
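One way to structure a prompt with several cache points is sketched below. It assumes Anthropic’s documented behavior that the cached prefix runs through tools, then system, then messages, in that order, with at most four `cache_control` breakpoints per request. The tool definition and placeholder texts are hypothetical, and `count_breakpoints` is an illustrative client-side check; the API enforces the limit itself.

```python
MAX_BREAKPOINTS = 4  # documented per-request limit


def count_breakpoints(request: dict) -> int:
    """Count tool definitions and content blocks that carry cache_control."""
    count = sum("cache_control" in tool for tool in request.get("tools", []))
    count += sum("cache_control" in block for block in request.get("system", []))
    for message in request.get("messages", []):
        content = message["content"]
        if isinstance(content, list):  # plain-string content has no breakpoint
            count += sum("cache_control" in block for block in content)
    return count


request = {
    "model": "claude-3-5-sonnet-20240620",  # example model name
    "max_tokens": 1024,
    # Most stable material first, most volatile last.
    "tools": [
        {
            "name": "lookup_order",  # hypothetical tool
            "description": "Look up an order by its ID.",
            "input_schema": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
            "cache_control": {"type": "ephemeral"},  # breakpoint 1: tools
        }
    ],
    "system": [
        {"type": "text", "text": "Long, rarely changing instructions...",
         "cache_control": {"type": "ephemeral"}},  # breakpoint 2: instructions
    ],
    "messages": [
        {"role": "user", "content": [
            {"type": "text", "text": "Earlier conversation turns...",
             "cache_control": {"type": "ephemeral"}},  # breakpoint 3: history
        ]},
        {"role": "assistant", "content": "Earlier reply..."},
        {"role": "user", "content": "The new question."},  # never cached
    ],
}

assert count_breakpoints(request) <= MAX_BREAKPOINTS
```

Layering breakpoints this way means that editing the conversation history invalidates only the last layer, while the tool definitions and instructions can still be read from cache.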

Potential Applications

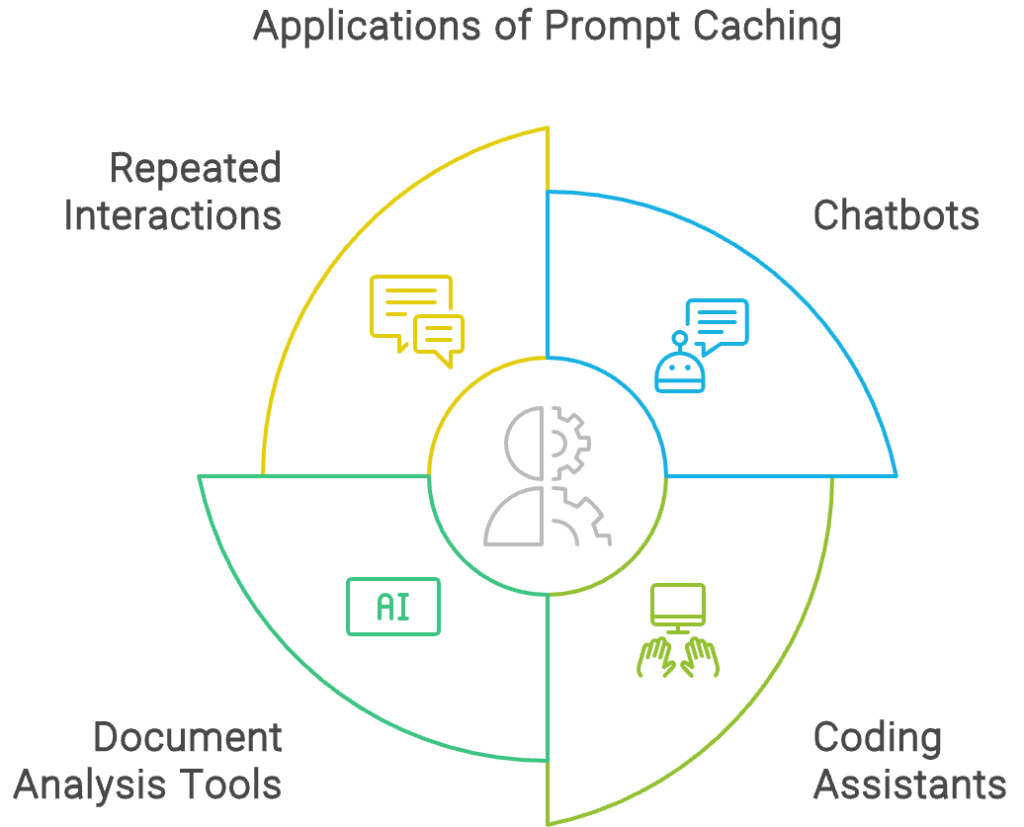

The applications are wide-ranging:

- Chatbots

- Coding assistants

- Document analysis tools

- Any application involving repeated interactions with an AI model

Limitations to Consider

While promising, prompt caching has its limitations:

- The 5-minute cache lifetime in Anthropic’s implementation may not suit applications with long gaps between requests.

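- Cost savings depend on reuse: writing to the cache costs 25% more than regular input, so content that isn’t reused before the cache expires ends up costing more, not less.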

Looking Ahead

As with any new technology, it will take time for best practices to emerge. Developers will need to experiment, measure results, and share their learnings.

Prompt caching is a small feature with potentially big implications. It’s not going to revolutionize AI overnight, but it’s the kind of incremental improvement that can dramatically expand what’s possible over time. It’s a sign that the field of AI is maturing, moving from raw capability to practical application.