7 September 2023

It’s hard to call ChatGPT a novelty, given its massive success and many active users, but that is exactly why someone finally asked what will happen to the model once it starts learning from content that its own users generated with it. Or, to put it simply: can it collapse because of that?

Large Language Models can be trained on either human-generated or machine-generated data. Ideally, when they are trained on human-generated data, the outcome might not be particularly creative, but there will be traces of human thought. The authors of the study “The Curse of Recursion: Training on Generated Data Makes Models Forget” reasoned that at some point ChatGPT will be learning from its own output, and that this is more than just plagiarism.

What is degradation? Generative AI models degenerate when they are trained on content produced by another AI, and this eventually leads to collapse.

Models are trained by processing the given information, even when it is unstructured, and looking for alternative ways of presenting it. When the collapse begins, those models start to lose information that happens to be less common but is still relevant. The output becomes steadily less diverse.
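To make the loss of rare information more tangible, here is a minimal Python sketch. It is a toy illustration, not the study's code and not how an LLM is actually trained: the "model" is just a table of token probabilities, and the names `next_generation`, `simulate_collapse` and the `human` distribution are made up for this example. Each generation samples from the current model and then refits the model on its own samples.

```python
import random
from collections import Counter


def next_generation(probs, sample_size, rng):
    """Sample from the current model, then refit it on its own samples."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    sample = rng.choices(tokens, weights=weights, k=sample_size)
    counts = Counter(sample)
    # Tokens that were never sampled are dropped: the new model
    # assigns them zero probability and cannot produce them again.
    return {token: count / sample_size for token, count in counts.items()}


def simulate_collapse(probs, sample_size=200, generations=30, seed=0):
    """Return the number of distinct tokens the model knows per generation."""
    rng = random.Random(seed)
    history = [len(probs)]
    for _ in range(generations):
        probs = next_generation(probs, sample_size, rng)
        history.append(len(probs))
    return history


# Hypothetical "human" data: two common tokens and ten rare ones.
human = {"common_a": 0.45, "common_b": 0.45}
human.update({f"rare_{i}": 0.01 for i in range(10)})

history = simulate_collapse(human)
print(history)  # distinct tokens remaining after each generation
```

In this toy setup, a token that is never sampled gets probability zero and can never come back, so the count of distinct tokens can only stay flat or fall from one generation to the next. The rare tokens are the ones most likely to disappear first.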

Generative AI models need to be trained on human-produced data to function well. When trained on synthetic data, new models become defective and homogeneous over time.

As a result, the new models depend on patterns that already existed in the previously generated data and simply replicate them.

It was noted that as the amount of synthetic content grows, “major degradation happens within just a few iterations, even when some of the original data is preserved” and “errors from optimization imperfections, limited models and finite data ultimately cause synthetic data to be of low(er) quality. Over time mistakes compound and ultimately force models that learn from generated data to misperceive reality even further”.

Collapse means that over time the model loses its ability to represent minorities, and at some point it completely loses parts of the originally given information. This can be controlled by making sure that minority groups are consistently represented in the outputs, but that requires some form of human control over generated texts, especially if the model is going to use them to create new texts.
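One way to picture that kind of control is to keep a share of original human data in every training round. The Python sketch below is only an assumption-laden toy, not the study's method and not a claim about real LLMs: the model is a table of token probabilities, and `refit_with_human_share`, `run` and the `human_share` parameter are hypothetical names introduced here. With `human_share=0.0` the model learns only from its own output; with a higher share, rare tokens lost in one generation can be reintroduced in the next.

```python
import random
from collections import Counter


def refit_with_human_share(model, human, sample_size, human_share, rng):
    """Refit the model on a mix of human data and its own output."""
    n_human = round(sample_size * human_share)
    n_synthetic = sample_size - n_human
    sample = []
    if n_human:
        # Fresh draws from the original human distribution.
        sample += rng.choices(list(human), weights=list(human.values()), k=n_human)
    if n_synthetic:
        # Draws from the current model, i.e. synthetic content.
        sample += rng.choices(list(model), weights=list(model.values()), k=n_synthetic)
    counts = Counter(sample)
    return {token: count / sample_size for token, count in counts.items()}


def run(human, sample_size, human_share, generations, seed=0):
    """Train for several generations and return the final model."""
    rng = random.Random(seed)
    model = dict(human)
    for _ in range(generations):
        model = refit_with_human_share(model, human, sample_size, human_share, rng)
    return model


# Hypothetical "human" data: two common tokens and ten rare ones.
human = {"common_a": 0.45, "common_b": 0.45}
human.update({f"rare_{i}": 0.01 for i in range(10)})

for share in (0.0, 0.3, 1.0):
    final = run(human, sample_size=200, human_share=share, generations=50)
    print(f"human_share={share}: {len(final)} of {len(human)} tokens remain")
```

The sketch leaves out the hard part described above: someone still has to decide which data counts as human-produced and check that minority groups are actually present in it.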

It’s difficult to say, however, what stage of the collapse we are currently at. ChatGPT alone can create content for thousands of people simultaneously, and those texts can be used virtually everywhere, including in scientific papers. Not to mention the marketing and sales content being created quicker than ever and delivered through many different platforms. Where some creativity used to be necessary, prompts are now enough for a marketing team to deliver a stream of content. It has to be understood that even though the order of words can be changed almost endlessly, the issue remains: those texts fall somewhere between plagiarism and saying the same things over and over again. Do they solve any problem of any business? Not really.

How come? At some point, readers, or simply Internet users, will notice the difference and begin to value high-quality content, the same way handmade art has more value than cheap copies bought for a few cents. It has been noticed before that great content cannot be recreated: copying something viral will not make the outcome go viral if the unique and thought-provoking factor is missing.

Similar observations apply to science: “ChatGPT is not just a running start toward a better essay. It is a substitute for the generative idea process that makes a great mind. It is degenerative in the sense that it undermines the growth that comes from spending time reflecting, ostensibly to free up more time to produce a product.” Put that way, it’s almost as if nobody expects ChatGPT to generate something great: it’s degenerative by definition, yet it frees up time for other goals.

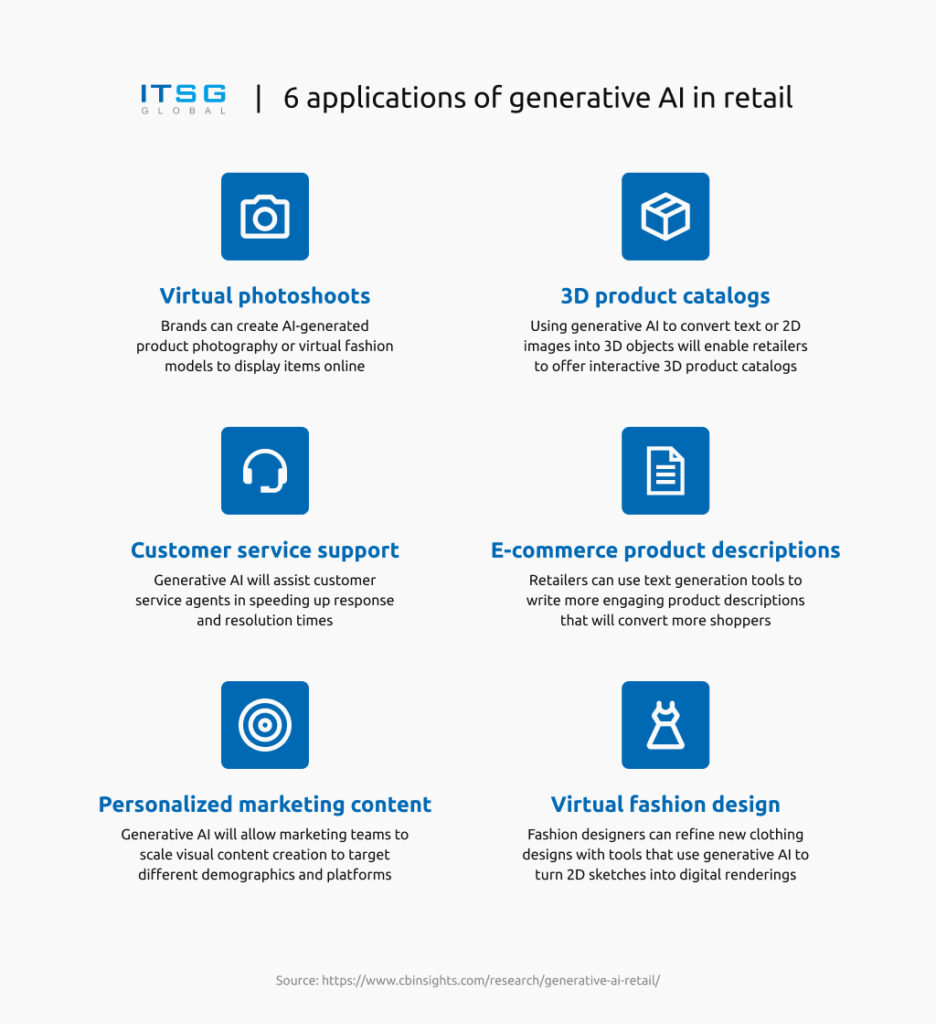

It’s also important to notice that the most popular applications of generative AI for business are less about creativity and more about effective customer service and showcasing products.

I wouldn’t say, however, that the degenerative part is getting worse. It’s simply becoming more noticeable, because the models use biased datasets and those biases are becoming more visible.

And because people expect more and more from ChatGPT and other LLMs, those models are going to fail them more often. If that brings more attention to human value in the creative process, then I don’t really see any harm in it.

Author: Cezary Dmowski, CTO, Co-owner at ITSG Global